Below is an example of customer classification to identify customer attrition, also known as churn.

These examples / cases / exercises are taken from the course Redes Neuronales Artificiales en los Negocios (Artificial Neural Networks in Business) of the Master's in Data Science at Universidad Ricardo Palma, taught by Ing. Mirko Rodriguez.

For this example we will use:

- A neural network built with Keras and TensorFlow

- Supervised learning

- The Adam optimization algorithm
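Adam adapts the step size of each weight using running averages of its gradients and squared gradients. As an illustration only, the loop below applies the update rule from the original Adam paper (Kingma and Ba) to a toy one-parameter problem, f(w) = (w - 3)^2. It is a sketch, not Keras's implementation; the learning rate of 0.1 is an assumption chosen for this toy problem (Keras's Adam defaults to 0.001).

```python
import numpy as np


def adam_minimize(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimal scalar Adam loop, for illustration only."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # running mean of the gradient
        v = beta2 * v + (1 - beta2) * g * g    # running mean of the squared gradient
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w_star = adam_minimize(lambda w: 2 * (w - 3), w0=0.0)
print(round(float(w_star), 3))
```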

All the code below has been tested in Google Colab with Python 3.x (the logs shown were produced with Python 3.6 and the standalone keras package).

We start by downloading the CSV data we will use, hosted on fragote.com:

%%bash
if [ ! -f "clientes_data.csv" ]; then
  wget www.fragote.com/data/clientes_data.csv
fi
ls -l

Next we define the helper functions needed for the predictive model and the analysis:

# Helper functions
import numpy as np
from sklearn.metrics import confusion_matrix
from sklearn.utils.multiclass import unique_labels
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23; use the standalone package
import seaborn as sns
import matplotlib.pyplot as plt

def plot_confusion_matrix(y_true, y_pred,
                          normalize=False,
                          title=None):
    """
    Prints and plots the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    """
    if not title:
        if normalize:
            title = 'Matriz de Confusión Normalizada'
        else:
            title = 'Matriz de Confusión sin Normalizar'

    # Compute the confusion matrix
    cm = confusion_matrix(y_true, y_pred)
    # Only use the labels that actually appear in the data
    classes = unique_labels(y_true, y_pred)
    if normalize:
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Matriz de Confusión Normalizada")
    else:
        print('Matriz de Confusión sin Normalizar')
    print(cm)

    fig, ax = plt.subplots()
    im = ax.imshow(cm, interpolation='nearest', cmap=plt.cm.Blues)
    ax.figure.colorbar(im, ax=ax)
    ax.grid(linewidth=.0)
    # Show every tick...
    ax.set(xticks=np.arange(cm.shape[1]),
           yticks=np.arange(cm.shape[0]),
           # ... and label them with the class names
           xticklabels=classes, yticklabels=classes,
           title=title,
           ylabel='True label',
           xlabel='Predicted label')
    # Rotate the tick labels
    plt.setp(ax.get_xticklabels(), rotation=45, ha="right", rotation_mode="anchor")

    # Loop over the matrix cells and write each value as a text annotation
    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    for i in range(cm.shape[0]):
        for j in range(cm.shape[1]):
            ax.text(j, i, format(cm[i, j], fmt),
                    ha="center", va="center",
                    color="white" if cm[i, j] > thresh else "black")
    fig.tight_layout()
    plt.show()
    return ax

def saveFile(object_to_save, scaler_filename):
    joblib.dump(object_to_save, scaler_filename)

def loadFile(scaler_filename):
    return joblib.load(scaler_filename)

def plotHistogram(dataset_final):
    dataset_final.hist(figsize=(20,14), edgecolor="black", bins=40)
    plt.show()

def plotCorrelations(dataset_final):
    fig, ax = plt.subplots(figsize=(10,8))  # size in inches
    g = sns.heatmap(dataset_final.corr(), annot=True, cmap="YlGnBu", ax=ax)
    g.set_yticklabels(g.get_yticklabels(), rotation = 0)
    g.set_xticklabels(g.get_xticklabels(), rotation = 45)
    fig.tight_layout()
    plt.show()
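The saveFile and loadFile helpers are thin wrappers over joblib.dump and joblib.load, which suits persisting a fitted scaler so that new data can later be transformed with the same statistics. A minimal round-trip sketch, assuming the standalone joblib package; the toy values and the file name are hypothetical:

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.preprocessing import StandardScaler


def saveFile(object_to_save, scaler_filename):
    joblib.dump(object_to_save, scaler_filename)


def loadFile(scaler_filename):
    return joblib.load(scaler_filename)


# Fit a scaler on toy data (hypothetical values)
scaler = StandardScaler().fit(np.array([[1.0], [2.0], [3.0]]))

# Hypothetical file name in the system temp directory
path = os.path.join(tempfile.gettempdir(), "scaler_demo.pkl")
saveFile(scaler, path)
restored = loadFile(path)

print(restored.mean_)  # [2.]
```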

Preprocessing the data from the CSV file:

# Import libraries
import pandas as pd
import numpy as np
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split

# Load the dataset
dataset_csv = pd.read_csv('clientes_data.csv')

# Dataset columns
print("\nColumnas del DataSet: ")
print(dataset_csv.columns)

# Describe the original data
print("\nDataset original:\n", dataset_csv.describe(include='all'))

# Reduced dataset: drop NumeroFila, DNI and Apellido (columns 0-2),
# which identify the customer and should carry no predictive information
dataset = dataset_csv.iloc[:, 3:14]
dataset_columns = dataset.columns
dataset_values = dataset.values

# Describe the truncated data
print("\nDataset reducido: ")
print("\n", dataset.head())

# Check the column data types
print("\nTipos de Columnas del Dataset: ")
print(dataset.dtypes)

Columnas del DataSet:

Index(['NumeroFila', 'DNI', 'Apellido', 'ScoreCrediticio', 'Pais', 'Genero',

'edad', 'Tenure', 'Balance', 'NumDeProducts', 'TieneTarjetaCredito',

'EsMiembroActivo', 'SalarioEstimado', 'Abandono'],

dtype='object')

Dataset original:

NumeroFila DNI ... SalarioEstimado Abandono

count 10000.00000 1.000000e+04 ... 10000.000000 10000.000000

unique NaN NaN ... NaN NaN

top NaN NaN ... NaN NaN

freq NaN NaN ... NaN NaN

mean 5000.50000 1.569094e+07 ... 100090.239881 0.203700

std 2886.89568 7.193619e+04 ... 57510.492818 0.402769

min 1.00000 1.556570e+07 ... 11.580000 0.000000

25% 2500.75000 1.562853e+07 ... 51002.110000 0.000000

50% 5000.50000 1.569074e+07 ... 100193.915000 0.000000

75% 7500.25000 1.575323e+07 ... 149388.247500 0.000000

max 10000.00000 1.581569e+07 ... 199992.480000 1.000000

[11 rows x 14 columns]

Dataset reducido:

ScoreCrediticio Pais Genero ... EsMiembroActivo SalarioEstimado Abandono

0 619 France Female ... 1 101348.88 1

1 608 Spain Female ... 1 112542.58 0

2 502 France Female ... 0 113931.57 1

3 699 France Female ... 0 93826.63 0

4 850 Spain Female ... 1 79084.10 0

[5 rows x 11 columns]

Tipos de Columnas del Dataset:

ScoreCrediticio int64

Pais object

Genero object

edad int64

Tenure int64

Balance float64

NumDeProducts int64

TieneTarjetaCredito int64

EsMiembroActivo int64

SalarioEstimado float64

Abandono int64

dtype: object

We encode the text columns Pais and Genero as numbers:

# Encode the categorical data as integers
labelEncoder_X_1 = LabelEncoder()
dataset_values[:, 1] = labelEncoder_X_1.fit_transform(dataset_values[:, 1])  # Pais
labelEncoder_X_2 = LabelEncoder()
dataset_values[:, 2] = labelEncoder_X_2.fit_transform(dataset_values[:, 2])  # Genero
print("\nDataset Categorizado: \n", dataset_values)

Dataset Categorizado:

[[619 0 0 ... 1 101348.88 1]

[608 2 0 ... 1 112542.58 0]

[502 0 0 ... 0 113931.57 1]

...

[709 0 0 ... 1 42085.58 1]

[772 1 1 ... 0 92888.52 1]

[792 0 0 ... 0 38190.78 0]]
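Note that LabelEncoder numbers the classes in alphabetical order, so for Pais it imposes an order (France=0, Spain=2 in the output above) that the network may read as a magnitude. A common alternative for nominal columns with more than two values is one-hot encoding; this is not what the course code does. A minimal comparison on hypothetical toy values:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Toy sample (hypothetical values, not taken from clientes_data.csv)
pais = pd.Series(["France", "Spain", "France", "Germany"], name="Pais")

# LabelEncoder assigns integers in alphabetical order of the classes
codes = LabelEncoder().fit_transform(pais)
print(codes)  # [0 2 0 1]

# One-hot encoding: one 0/1 column per country, with no implied order
one_hot = pd.get_dummies(pais, prefix="Pais", dtype=int)
print(one_hot)
```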

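We scale the features with StandardScaler, which standardizes each column as (x - u)/s, where u is the column mean and s its standard deviation, so every feature ends up with mean 0 and standard deviation 1: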

# Standardize the 10 feature columns (StandardScaler: (x-u)/s);
# column 10 (Abandono) is the label and is left unchanged
stdScaler = StandardScaler()
dataset_values[:, 0:10] = stdScaler.fit_transform(dataset_values[:, 0:10])

# Final standardized dataset
dataset_final = pd.DataFrame(dataset_values, columns=dataset_columns, dtype=np.float64)
print("\nDataset Final:")
print(dataset_final.describe(include='all'))
print("\n", dataset_final.head())

Dataset Final:

ScoreCrediticio Pais ... SalarioEstimado Abandono

count 1.000000e+04 1.000000e+04 ... 1.000000e+04 10000.000000

mean -4.870326e-16 5.266676e-16 ... -1.580958e-17 0.203700

std 1.000050e+00 1.000050e+00 ... 1.000050e+00 0.402769

min -3.109504e+00 -9.018862e-01 ... -1.740268e+00 0.000000

25% -6.883586e-01 -9.018862e-01 ... -8.535935e-01 0.000000

50% 1.522218e-02 -9.018862e-01 ... 1.802807e-03 0.000000

75% 6.981094e-01 3.065906e-01 ... 8.572431e-01 0.000000

max 2.063884e+00 1.515067e+00 ... 1.737200e+00 1.000000

[8 rows x 11 columns]

ScoreCrediticio Pais ... SalarioEstimado Abandono

0 -0.326221 -0.901886 ... 0.021886 1.0

1 -0.440036 1.515067 ... 0.216534 0.0

2 -1.536794 -0.901886 ... 0.240687 1.0

3 0.501521 -0.901886 ... -0.108918 0.0

4 2.063884 1.515067 ... -0.365276 0.0

[5 rows x 11 columns]
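The standardized columns show std = 1.000050 rather than exactly 1 because StandardScaler divides by the population standard deviation (ddof=0), while pandas describe() reports the sample standard deviation (ddof=1); with n = 10000 the ratio is sqrt(10000/9999), about 1.00005. A quick check on synthetic data (an assumption, not the course dataset):

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Synthetic column with 10000 rows (hypothetical data)
rng = np.random.default_rng(0)
x = rng.normal(loc=650.0, scale=100.0, size=(10000, 1))

z = StandardScaler().fit_transform(x)

# StandardScaler computes (x - mean) / std, using np.std's default ddof=0
manual = (x - x.mean(axis=0)) / x.std(axis=0)
print(np.allclose(z, manual))  # True

# pandas .std() uses ddof=1, hence the ~1.00005 seen in describe()
print(pd.Series(z[:, 0]).std())
```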

We plot the distribution of the data and the correlation matrix:

# Data distributions and correlations
print("\n Histogramas:")
plotHistogram(dataset_final)
print("\n Correlaciones:")
plotCorrelations(dataset_final)

Histogramas:

Correlaciones:

We split the data into training and test sets, 80% for training and 20% for testing:

# Extract the values to process: columns 0-9 are the features, column 10 (Abandono) is the label
X = dataset_final.iloc[:, 0:10].values
y = dataset_final.iloc[:, 10].values

# Split the dataset into training and test sets (80% / 20%)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0)
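The code above fits the scaler on all 10000 rows before splitting, so statistics from the test rows leak into the preprocessing. A common refinement (not the course's approach) is to split first and fit the scaler on the training portion only; stratify additionally keeps the churn rate (mean of Abandono = 0.2037) the same in both sets. A sketch on synthetic stand-in arrays (assumption):

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the 10 encoded, not yet scaled, feature columns (assumption)
rng = np.random.default_rng(0)
X_raw = rng.normal(size=(10000, 10))
y = rng.integers(0, 2, size=10000)

# 1) Split first, stratifying on the label so both sets keep the class proportions
X_train, X_test, y_train, y_test = train_test_split(
    X_raw, y, test_size=0.2, random_state=0, stratify=y)

# 2) Fit the scaler on the training set only, then apply it to both sets
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)
```

The fitted scaler could then be persisted with the saveFile helper defined earlier, so that new customers are scaled with the training statistics.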

With the data prepared, we build the neural network:

# Import Keras (running on a TensorFlow backend)
from keras.models import Sequential
from keras.layers import Dense
from keras.initializers import RandomUniform

# Initialize the neural network
neural_network = Sequential()

# kernel_initializer defines how the initial weights Wi are assigned:
# here, uniformly at random in [-0.5, 0.5]
initial_weights = RandomUniform(minval = -0.5, maxval = 0.5)

# Add the input layer and the first hidden layer:
# 10 input neurons and 8 neurons in the first hidden layer
neural_network.add(Dense(units = 8, kernel_initializer = initial_weights, activation = 'relu', input_dim = 10))

# Add the second hidden layer
neural_network.add(Dense(units = 5, kernel_initializer = initial_weights, activation = 'relu'))

# Add the third hidden layer
neural_network.add(Dense(units = 4, kernel_initializer = initial_weights, activation = 'relu'))

# Add the output layer (the sigmoid returns the churn probability)
neural_network.add(Dense(units = 1, kernel_initializer = initial_weights, activation = 'sigmoid'))

We have built a network with an input layer of 10 neurons, 8 neurons in the first hidden layer, 5 in the second hidden layer, 4 in the third hidden layer, and an output layer with 1 neuron.
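The parameter counts that Keras reports for this architecture follow directly from the layer widths: a Dense layer with n_in inputs and n_out units has n_in × n_out weights plus n_out biases. A small arithmetic check:

```python
# Layer widths: 10 input features, hidden layers of 8, 5 and 4 units, 1 output unit
layer_sizes = [10, 8, 5, 4, 1]

# Each Dense layer: n_in * n_out weights + n_out biases
params = [n_in * n_out + n_out
          for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
print(params)       # [88, 45, 24, 5]
print(sum(params))  # 162
```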

Let's look at the network architecture:

# Print the network architecture
neural_network.summary()

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 8) 88

_________________________________________________________________

dense_2 (Dense) (None, 5) 45

_________________________________________________________________

dense_3 (Dense) (None, 4) 24

_________________________________________________________________

dense_4 (Dense) (None, 1) 5

=================================================================

Total params: 162

Trainable params: 162

Non-trainable params: 0

_________________________________________________________________

We compile the model and train it for 100 epochs:

# Compile the neural network
# optimizer: optimization algorithm (Adam)
# loss: error function to minimize; binary_crossentropy because there are 2 classes
neural_network.compile(optimizer = 'adam', loss = 'binary_crossentropy', metrics = ['accuracy'])

# Training: 100 epochs with mini-batches of 32 samples
neural_network.fit(X_train, y_train, batch_size = 32, epochs = 100)


Epoch 1/100


8000/8000 [==============================] - 7s 832us/step - loss: 0.5679 - acc: 0.7696

Epoch 2/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.4454 - acc: 0.8071

Epoch 3/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.4205 - acc: 0.8233

Epoch 4/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.4033 - acc: 0.8350

Epoch 5/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3872 - acc: 0.8442

Epoch 6/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3743 - acc: 0.8485

Epoch 7/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3659 - acc: 0.8480

Epoch 8/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3612 - acc: 0.8498

Epoch 9/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3585 - acc: 0.8520

Epoch 10/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3564 - acc: 0.8524

Epoch 11/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3548 - acc: 0.8538

Epoch 12/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3537 - acc: 0.8548

Epoch 13/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.3531 - acc: 0.8533

Epoch 14/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3521 - acc: 0.8556

Epoch 15/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3513 - acc: 0.8536

Epoch 16/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3510 - acc: 0.8551

Epoch 17/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3497 - acc: 0.8561

Epoch 18/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3493 - acc: 0.8553

Epoch 19/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3491 - acc: 0.8562

Epoch 20/100

8000/8000 [==============================] - 1s 154us/step - loss: 0.3477 - acc: 0.8559

Epoch 21/100

8000/8000 [==============================] - 1s 178us/step - loss: 0.3474 - acc: 0.8562

Epoch 22/100

8000/8000 [==============================] - 1s 181us/step - loss: 0.3461 - acc: 0.8580

Epoch 23/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3454 - acc: 0.8558

Epoch 24/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3444 - acc: 0.8569

Epoch 25/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3428 - acc: 0.8582

Epoch 26/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3425 - acc: 0.8574

Epoch 27/100

8000/8000 [==============================] - 2s 194us/step - loss: 0.3410 - acc: 0.8591

Epoch 28/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3403 - acc: 0.8600

Epoch 29/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3396 - acc: 0.8613

Epoch 30/100

8000/8000 [==============================] - 2s 192us/step - loss: 0.3385 - acc: 0.8611

Epoch 31/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3378 - acc: 0.8609

Epoch 32/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3371 - acc: 0.8611

Epoch 33/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3366 - acc: 0.8608

Epoch 34/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3361 - acc: 0.8618

Epoch 35/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3361 - acc: 0.8621

Epoch 36/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3360 - acc: 0.8626

Epoch 37/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3353 - acc: 0.8599

Epoch 38/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3354 - acc: 0.8629

Epoch 39/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3353 - acc: 0.8622

Epoch 40/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3345 - acc: 0.8619

Epoch 41/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3343 - acc: 0.8631

Epoch 42/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3348 - acc: 0.8619

Epoch 43/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3340 - acc: 0.8606

Epoch 44/100

8000/8000 [==============================] - 1s 183us/step - loss: 0.3342 - acc: 0.8621

Epoch 45/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3335 - acc: 0.8631

Epoch 46/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3339 - acc: 0.8624

Epoch 47/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3333 - acc: 0.8630

Epoch 48/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3336 - acc: 0.8611

Epoch 49/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3331 - acc: 0.8630

Epoch 50/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3333 - acc: 0.8635

Epoch 51/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3331 - acc: 0.8629

Epoch 52/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3333 - acc: 0.8609

Epoch 53/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3327 - acc: 0.8625

Epoch 54/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3325 - acc: 0.8624

Epoch 55/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3327 - acc: 0.8624

Epoch 56/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3325 - acc: 0.8629

Epoch 57/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3330 - acc: 0.8619

Epoch 58/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3326 - acc: 0.8625

Epoch 59/100

8000/8000 [==============================] - 1s 170us/step - loss: 0.3327 - acc: 0.8624

Epoch 60/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3322 - acc: 0.8638

Epoch 61/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3323 - acc: 0.8635

Epoch 62/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3318 - acc: 0.8618

Epoch 63/100

8000/8000 [==============================] - 1s 187us/step - loss: 0.3318 - acc: 0.8640

Epoch 64/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3315 - acc: 0.8640

Epoch 65/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3317 - acc: 0.8650

Epoch 66/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3318 - acc: 0.8625

Epoch 67/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3319 - acc: 0.8629

Epoch 68/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3317 - acc: 0.8631

Epoch 69/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3318 - acc: 0.8649

Epoch 70/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3316 - acc: 0.8634

Epoch 71/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3313 - acc: 0.8636

Epoch 72/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3317 - acc: 0.8642

Epoch 73/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.3310 - acc: 0.8626

Epoch 74/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3317 - acc: 0.8650

Epoch 75/100

8000/8000 [==============================] - 1s 184us/step - loss: 0.3320 - acc: 0.8629

Epoch 76/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3315 - acc: 0.8644

Epoch 77/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3313 - acc: 0.8636

Epoch 78/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3316 - acc: 0.8635

Epoch 79/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3315 - acc: 0.8639

Epoch 80/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3311 - acc: 0.8625

Epoch 81/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3310 - acc: 0.8638

Epoch 82/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3308 - acc: 0.8638

Epoch 83/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3310 - acc: 0.8645

Epoch 84/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3307 - acc: 0.8645

Epoch 85/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3307 - acc: 0.8626

Epoch 86/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3304 - acc: 0.8624

Epoch 87/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3308 - acc: 0.8658

Epoch 88/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3306 - acc: 0.8651

Epoch 89/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3305 - acc: 0.8645

Epoch 90/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3300 - acc: 0.8650

Epoch 91/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3308 - acc: 0.8640

Epoch 92/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3305 - acc: 0.8634

Epoch 93/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3304 - acc: 0.8647

Epoch 94/100

8000/8000 [==============================] - 2s 192us/step - loss: 0.3301 - acc: 0.8647

Epoch 95/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3302 - acc: 0.8634

Epoch 96/100

8000/8000 [==============================] - 1s 178us/step - loss: 0.3307 - acc: 0.8644

Epoch 97/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3306 - acc: 0.8636

Epoch 98/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3308 - acc: 0.8635

Epoch 99/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.3303 - acc: 0.8632

Epoch 100/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3299 - acc: 0.8640

<keras.callbacks.History at 0x7f4c83f1f5c0>
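For reference, the loss reported in each epoch is the binary cross-entropy averaged over the training samples, where $y_i \in \{0, 1\}$ is the true label (Abandono), $\hat{y}_i$ is the sigmoid output of the network and $N$ is the number of samples:

$$
\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\left[\, y_i \log \hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right) \right]
$$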

After 100 epochs the model reaches an accuracy of 86.4% on the training data (the acc value of the last epoch).

We then predict on the whole test set, labeling outputs greater than 0.5 as churn and outputs less than or equal to 0.5 as no churn, and compare the first 50 predictions with the true values:

# Predict the test-set results
y_pred = neural_network.predict(X_test)  # churn probabilities (sigmoid output)
y_pred_norm = (y_pred > 0.5)             # threshold at 0.5
y_pred_norm = y_pred_norm.astype(int)
y_test = y_test.astype(int)

# Compare the first 50 results
# Predicción(N): thresholded class; Predicción(O): original sigmoid output
print("\nPredicciones (50 primeros):")
print("\n\tReal", "\t", "Predicción(N)", "\t", "Predicción(O)")
for i in range(50):
    print(i, '\t', y_test[i], '\t ', y_pred_norm[i], '\t \t', y_pred[i])

Predicciones (50 primeros):

Real Predicción(N) Predicción(O)

0 0 [0] [0.25591797]

1 1 [0] [0.31653506]

2 0 [0] [0.16617256]

3 0 [0] [0.07125753]

4 0 [0] [0.06709316]

5 1 [1] [0.9561175]

6 0 [0] [0.01982319]

7 0 [0] [0.0801284]

8 1 [0] [0.16028535]

9 1 [1] [0.76239324]

10 0 [0] [0.03265542]

11 0 [0] [0.21288738]

12 0 [0] [0.29622465]

13 0 [0] [0.28117135]

14 1 [1] [0.6112473]

15 1 [0] [0.3089583]

16 0 [0] [0.08254933]

17 0 [0] [0.07191664]

18 0 [0] [0.08332911]

19 0 [0] [0.10069495]

20 0 [1] [0.5985567]

21 0 [0] [0.00851977]

22 0 [0] [0.04273018]

23 0 [0] [0.0951266]

24 0 [0] [0.00786319]

25 0 [0] [0.16710573]

26 0 [0] [0.12770015]

27 0 [0] [0.03628084]

28 0 [0] [0.35433203]

29 0 [0] [0.35582292]

30 0 [0] [0.02590874]

31 1 [0] [0.4530319]

32 0 [0] [0.10775042]

33 0 [0] [0.02804729]

34 0 [0] [0.32664955]

35 0 [0] [0.02045524]

36 0 [0] [0.01842964]

37 0 [0] [0.02650151]

38 0 [0] [0.02766502]

39 0 [0] [0.1354188]

40 0 [0] [0.12443781]

41 1 [0] [0.42804298]

42 1 [0] [0.46373382]

43 0 [0] [0.04600218]

44 0 [1] [0.6296924]

45 0 [0] [0.05090821]

46 0 [0] [0.3721879]

47 0 [0] [0.12022552]

48 0 [1] [0.5840349]

49 0 [0] [0.00435194]

Applying the confusion matrix:

# Compute the confusion matrix (rows = true class, columns = predicted class)
from sklearn.metrics import confusion_matrix
cm = confusion_matrix(y_test, y_pred_norm)
print("\nMatriz de Confusión: \n", cm)

Matriz de Confusión:

[[1499 96]

[ 196 209]]
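The usual summary metrics can be read straight off this matrix. The sketch below hard-codes the values printed above; on the real arrays, sklearn.metrics.classification_report(y_test, y_pred_norm) gives the same figures. Test accuracy (85.4%) is slightly below the final training accuracy, and only about half of the customers who actually churned are detected:

```python
import numpy as np

# Confusion matrix printed above (rows = true class, columns = predicted class)
cm = np.array([[1499,  96],
               [ 196, 209]])
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()   # 1708 / 2000
precision = tp / (tp + fp)        # 209 / 305
recall = tp / (tp + fn)           # 209 / 405
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
# accuracy=0.854 precision=0.685 recall=0.516
```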

Plotting the confusion matrix:

plot_confusion_matrix(y_test, y_pred_norm, normalize=False,title="Matriz de Confusión: Abandono de clientes")

Matriz de Confusión sin Normalizar

[[1499 96]

[ 196 209]]
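Several churners among the 50 printed predictions sit just under the 0.5 cut-off (rows 31, 41 and 42, with outputs between 0.43 and 0.46). The sketch below re-scores those 50 printed rows at lower thresholds to show the trade-off between catching more churners and raising more false alarms. Fifty rows are far too few to choose a threshold; that would normally be tuned on separate validation data.

```python
import numpy as np

# First 50 test rows as printed above: sigmoid output and true label
y_prob = np.array([
    0.25591797, 0.31653506, 0.16617256, 0.07125753, 0.06709316,  # 0-4
    0.9561175,  0.01982319, 0.0801284,  0.16028535, 0.76239324,  # 5-9
    0.03265542, 0.21288738, 0.29622465, 0.28117135, 0.6112473,   # 10-14
    0.3089583,  0.08254933, 0.07191664, 0.08332911, 0.10069495,  # 15-19
    0.5985567,  0.00851977, 0.04273018, 0.0951266,  0.00786319,  # 20-24
    0.16710573, 0.12770015, 0.03628084, 0.35433203, 0.35582292,  # 25-29
    0.02590874, 0.4530319,  0.10775042, 0.02804729, 0.32664955,  # 30-34
    0.02045524, 0.01842964, 0.02650151, 0.02766502, 0.1354188,   # 35-39
    0.12443781, 0.42804298, 0.46373382, 0.04600218, 0.6296924,   # 40-44
    0.05090821, 0.3721879,  0.12022552, 0.5840349,  0.00435194,  # 45-49
])
y_true = np.array([
    0, 1, 0, 0, 0, 1, 0, 0, 1, 1,
    0, 0, 0, 0, 1, 1, 0, 0, 0, 0,
    0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
    0, 1, 0, 0, 0, 0, 0, 0, 0, 0,
    0, 1, 1, 0, 0, 0, 0, 0, 0, 0,
])

for threshold in (0.5, 0.4, 0.3):
    y_hat = (y_prob > threshold).astype(int)
    tp = int(np.sum((y_hat == 1) & (y_true == 1)))  # churners detected
    fp = int(np.sum((y_hat == 1) & (y_true == 0)))  # false alarms
    fn = int(np.sum((y_hat == 0) & (y_true == 1)))  # churners missed
    print(f"threshold={threshold}: TP={tp} FP={fp} FN={fn}")
# threshold=0.5: TP=3 FP=3 FN=6
# threshold=0.4: TP=6 FP=3 FN=3
# threshold=0.3: TP=8 FP=7 FN=1
```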

Next we try a second, smaller architecture:

Input => 10

Hidden => 8/4 (two hidden layers, with 8 and 4 neurons)

Output => 1

# Initialize the neural network
neural_network2 = Sequential()

# kernel_initializer defines how the initial weights Wi are assigned
initial_weights = RandomUniform(minval = -0.5, maxval = 0.5)

# Input layer (10 inputs) and first hidden layer (8 neurons)
neural_network2.add(Dense(units = 8, kernel_initializer = initial_weights, activation = 'relu', input_dim = 10))

# Second hidden layer (4 neurons)
neural_network2.add(Dense(units = 4, kernel_initializer = initial_weights, activation = 'relu'))

# Add the output layer
neural_network2.add(Dense(units = 1, kernel_initializer = initial_weights, activation = 'sigmoid'))

# Print the network architecture
neural_network2.summary()

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_5 (Dense) (None, 8) 88

_________________________________________________________________

dense_6 (Dense) (None, 4) 36

_________________________________________________________________

dense_7 (Dense) (None, 1) 5

=================================================================

Total params: 129

Trainable params: 129

Non-trainable params: 0

_________________________________________________________________

Training model 2:

# Compile the neural network
# optimizer: optimization algorithm (Adam)
# loss: error function to minimize; binary_crossentropy because there are 2 classes
neural_network2.compile(optimizer = 'adam', loss = 'binary_crossentropy', metrics = ['accuracy'])

# Training: 100 epochs with mini-batches of 32 samples
neural_network2.fit(X_train, y_train, batch_size = 32, epochs = 100)

Epoch 1/100

8000/8000 [==============================] - 2s 208us/step - loss: 0.6309 - acc: 0.7251

Epoch 2/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.4994 - acc: 0.7960

Epoch 3/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.4579 - acc: 0.7962

Epoch 4/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.4301 - acc: 0.8054

Epoch 5/100

8000/8000 [==============================] - 1s 152us/step - loss: 0.4111 - acc: 0.8207

Epoch 6/100

8000/8000 [==============================] - 1s 142us/step - loss: 0.3908 - acc: 0.8379

Epoch 7/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3766 - acc: 0.8441

Epoch 8/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3682 - acc: 0.8490

Epoch 9/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3637 - acc: 0.8508

Epoch 10/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3604 - acc: 0.8521

Epoch 11/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3585 - acc: 0.8535

Epoch 12/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3569 - acc: 0.8544

Epoch 13/100

8000/8000 [==============================] - 1s 158us/step - loss: 0.3557 - acc: 0.8538

Epoch 14/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3547 - acc: 0.8556

Epoch 15/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3541 - acc: 0.8555

Epoch 16/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3533 - acc: 0.8568

Epoch 17/100

8000/8000 [==============================] - 1s 173us/step - loss: 0.3527 - acc: 0.8568

Epoch 18/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3519 - acc: 0.8576

Epoch 19/100

8000/8000 [==============================] - 1s 146us/step - loss: 0.3516 - acc: 0.8581

Epoch 20/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3509 - acc: 0.8584

Epoch 21/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3508 - acc: 0.8575

Epoch 22/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3502 - acc: 0.8586

Epoch 23/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3500 - acc: 0.8585

Epoch 24/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3497 - acc: 0.8579

Epoch 25/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3493 - acc: 0.8591

Epoch 26/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3486 - acc: 0.8569

Epoch 27/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3489 - acc: 0.8579

Epoch 28/100

8000/8000 [==============================] - 1s 158us/step - loss: 0.3486 - acc: 0.8595

Epoch 29/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3483 - acc: 0.8582

Epoch 30/100

8000/8000 [==============================] - 1s 152us/step - loss: 0.3484 - acc: 0.8575

Epoch 31/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3476 - acc: 0.8590

Epoch 32/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3478 - acc: 0.8585

Epoch 33/100

8000/8000 [==============================] - 1s 150us/step - loss: 0.3474 - acc: 0.8578

Epoch 34/100

8000/8000 [==============================] - 1s 158us/step - loss: 0.3472 - acc: 0.8592

Epoch 35/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3468 - acc: 0.8595

Epoch 36/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3467 - acc: 0.8585

Epoch 37/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3467 - acc: 0.8590

Epoch 38/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3462 - acc: 0.8579

Epoch 39/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3462 - acc: 0.8586

Epoch 40/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3458 - acc: 0.8587

Epoch 41/100

8000/8000 [==============================] - 1s 154us/step - loss: 0.3458 - acc: 0.8598

Epoch 42/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3462 - acc: 0.8587

Epoch 43/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3454 - acc: 0.8578

Epoch 44/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3455 - acc: 0.8581

Epoch 45/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3452 - acc: 0.8582

Epoch 46/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3454 - acc: 0.8579

Epoch 47/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3453 - acc: 0.8584

Epoch 48/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3452 - acc: 0.8587

Epoch 49/100

8000/8000 [==============================] - 1s 154us/step - loss: 0.3450 - acc: 0.8582

Epoch 50/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3447 - acc: 0.8591

Epoch 51/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3448 - acc: 0.8586

Epoch 52/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3445 - acc: 0.8596

Epoch 53/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3443 - acc: 0.8579

Epoch 54/100

8000/8000 [==============================] - 1s 147us/step - loss: 0.3441 - acc: 0.8586

Epoch 55/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3439 - acc: 0.8590

Epoch 56/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3436 - acc: 0.8608

Epoch 57/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3439 - acc: 0.8596

Epoch 58/100

8000/8000 [==============================] - 1s 154us/step - loss: 0.3435 - acc: 0.8591

Epoch 59/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3433 - acc: 0.8594

Epoch 60/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3426 - acc: 0.8603

Epoch 61/100

8000/8000 [==============================] - 1s 148us/step - loss: 0.3429 - acc: 0.8595

Epoch 62/100

8000/8000 [==============================] - 1s 151us/step - loss: 0.3427 - acc: 0.8609

Epoch 63/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3426 - acc: 0.8606

Epoch 64/100

8000/8000 [==============================] - 1s 151us/step - loss: 0.3424 - acc: 0.8608

Epoch 65/100

8000/8000 [==============================] - 1s 151us/step - loss: 0.3420 - acc: 0.8608

Epoch 66/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3423 - acc: 0.8610

Epoch 67/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3417 - acc: 0.8598

Epoch 68/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3418 - acc: 0.8621

Epoch 69/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3416 - acc: 0.8603

Epoch 70/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3414 - acc: 0.8614

Epoch 71/100

8000/8000 [==============================] - 1s 150us/step - loss: 0.3413 - acc: 0.8619

Epoch 72/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3408 - acc: 0.8609

Epoch 73/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3411 - acc: 0.8625

Epoch 74/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3409 - acc: 0.8600

Epoch 75/100

8000/8000 [==============================] - 1s 141us/step - loss: 0.3405 - acc: 0.8604

Epoch 76/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3406 - acc: 0.8605

Epoch 77/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3405 - acc: 0.8616

Epoch 78/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3404 - acc: 0.8608

Epoch 79/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3399 - acc: 0.8618

Epoch 80/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3400 - acc: 0.8606

Epoch 81/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3399 - acc: 0.8600

Epoch 82/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3399 - acc: 0.8598

Epoch 83/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3397 - acc: 0.8604

Epoch 84/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3397 - acc: 0.8613

Epoch 85/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3394 - acc: 0.8616

Epoch 86/100

8000/8000 [==============================] - 1s 155us/step - loss: 0.3393 - acc: 0.8598

Epoch 87/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3394 - acc: 0.8611

Epoch 88/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3393 - acc: 0.8613

Epoch 89/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3392 - acc: 0.8604

Epoch 90/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3392 - acc: 0.8606

Epoch 91/100

8000/8000 [==============================] - 1s 153us/step - loss: 0.3391 - acc: 0.8608

Epoch 92/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3391 - acc: 0.8595

Epoch 93/100

8000/8000 [==============================] - 1s 158us/step - loss: 0.3389 - acc: 0.8619

Epoch 94/100

8000/8000 [==============================] - 1s 144us/step - loss: 0.3392 - acc: 0.8611

Epoch 95/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3392 - acc: 0.8601

Epoch 96/100

8000/8000 [==============================] - 1s 143us/step - loss: 0.3388 - acc: 0.8596

Epoch 97/100

8000/8000 [==============================] - 1s 146us/step - loss: 0.3390 - acc: 0.8615

Epoch 98/100

8000/8000 [==============================] - 1s 145us/step - loss: 0.3389 - acc: 0.8614

Epoch 99/100

8000/8000 [==============================] - 1s 151us/step - loss: 0.3387 - acc: 0.8592

Epoch 100/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3386 - acc: 0.8608

<keras.callbacks.History at 0x7f4c84735860>

As we can see, after 100 epochs the new architecture reaches about 86% accuracy (0.8608 in the last epoch). This figure is measured on the training data.

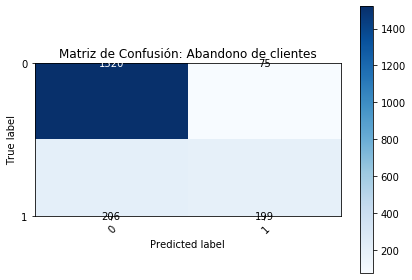
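The `acc` value in the log measures how often the thresholded sigmoid output matches the 0/1 label. Keras computes this binary accuracy by rounding the predictions, which amounts to a 0.5 cut-off. That is the same threshold the test predictions below use.

The sketch below reproduces the idea in NumPy. The labels and probabilities are made-up toy values, not data from this example.

```python
import numpy as np

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the 0/1 label."""
    y_true = np.asarray(y_true).ravel()
    y_hat = (np.asarray(y_prob).ravel() > threshold).astype(int)
    return float(np.mean(y_hat == y_true))

# Hypothetical labels and sigmoid outputs, for illustration only
y_true = np.array([0, 0, 1, 1, 0])
y_prob = np.array([0.10, 0.70, 0.80, 0.40, 0.20])
print(binary_accuracy(y_true, y_prob))  # 0.6
```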

Let's review the confusion matrix on the test set:

# Predicting the results for the test set

y_pred = neural_network2.predict(X_test)

# Convert the churn probabilities into 0/1 labels using a 0.5 threshold

y_pred_norm = (y_pred > 0.5).astype(int)

y_test = y_test.astype(int)

plot_confusion_matrix(y_test, y_pred_norm, normalize=False, title="Matriz de Confusión: Abandono de clientes")

Matriz de Confusión sin Normalizar

[[1520 75]

[ 206 199]]
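Rows are the true labels and columns are the predicted labels, following the layout of scikit-learn's `confusion_matrix`. The 2,000 test customers therefore split into:

- 1,520 true negatives
- 75 false positives
- 206 false negatives
- 199 true positives

Test accuracy is (1520 + 199) / 2000 ≈ 86%, close to the training accuracy. However, only about half of the customers who actually left (199 of 405) are detected.

The following sketch derives the usual metrics for the churn class from those four numbers.

```python
def churn_metrics(cm):
    """Accuracy, precision, recall and F1 for the positive (churn) class.

    Assumes cm = [[TN, FP], [FN, TP]]: rows are true labels and columns are
    predicted labels, the layout returned by sklearn's confusion_matrix.
    """
    (tn, fp), (fn, tp) = cm
    total = tn + fp + fn + tp
    accuracy = (tn + tp) / total
    precision = tp / (tp + fp)   # of the customers flagged, how many left
    recall = tp / (tp + fn)      # of the customers who left, how many were flagged
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

cm_nn2 = [[1520, 75], [206, 199]]  # matrix printed above for neural_network2
for name, value in churn_metrics(cm_nn2).items():
    print(f"{name}: {value:.4f}")
```

This prints an accuracy of 0.8595, a precision of 0.7263, a recall of 0.4914 and an F1 of 0.5862. The low recall is what accuracy alone hides in an imbalanced churn problem.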

We now try a new architecture with three hidden layers:

Input => 10

Hidden => 9/7/5

Output => 1
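To make the 10 => 9/7/5 => 1 layout concrete, here is a minimal NumPy sketch of the forward pass this architecture computes. It uses ReLU in the hidden layers, a sigmoid output, and weights drawn uniformly from [-0.5, 0.5] as with the `RandomUniform` initializer below. Biases start at zero, which is the Keras `Dense` default.

This is an illustration of the math, not Keras' implementation. The random input rows are hypothetical stand-ins for the 10 preprocessed customer features.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_layer(n_in, n_out, low=-0.5, high=0.5):
    """Uniform random weights in [low, high) and zero biases."""
    W = rng.uniform(low, high, size=(n_in, n_out))
    b = np.zeros(n_out)
    return W, b

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

sizes = [10, 9, 7, 5, 1]
layers = [init_layer(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(X):
    h = X
    for W, b in layers[:-1]:
        h = relu(h @ W + b)      # hidden layers with 9, 7 and 5 ReLU units
    W, b = layers[-1]
    return sigmoid(h @ W + b)    # single output: estimated probability of churn

X = rng.normal(size=(4, 10))     # 4 hypothetical, already-scaled customers
print(forward(X).shape)          # (4, 1)
```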

from keras.models import Sequential

from keras.layers import Dense

from keras.initializers import RandomUniform

# Initializing the neural network

neural_network3 = Sequential()

# kernel_initializer defines how the initial weights Wi are assigned:
# here, uniformly at random in [-0.5, 0.5]

initial_weights = RandomUniform(minval = -0.5, maxval = 0.5)

# Three hidden layers with 9, 7 and 5 units and ReLU activation

neural_network3.add(Dense(units = 9, kernel_initializer = initial_weights, activation = 'relu', input_dim = 10))

neural_network3.add(Dense(units = 7, kernel_initializer = initial_weights, activation = 'relu'))

neural_network3.add(Dense(units = 5, kernel_initializer = initial_weights, activation = 'relu'))

# Adding the output layer (sigmoid returns the probability of churn)

neural_network3.add(Dense(units = 1, kernel_initializer = initial_weights, activation = 'sigmoid'))

# Print the network architecture

neural_network3.summary()

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_8 (Dense) (None, 9) 99

_________________________________________________________________

dense_9 (Dense) (None, 7) 70

_________________________________________________________________

dense_10 (Dense) (None, 5) 40

_________________________________________________________________

dense_11 (Dense) (None, 1) 6

=================================================================

Total params: 215

Trainable params: 215

Non-trainable params: 0

_________________________________________________________________
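The `Param #` column follows from the layer sizes. Each `Dense` layer has an n_in × n_out weight matrix plus one bias per unit, so it has n_in · n_out + n_out parameters. A quick check:

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

sizes = [10, 9, 7, 5, 1]
per_layer = [dense_params(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
print(per_layer, sum(per_layer))  # [99, 70, 40, 6] 215
```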

# Compiling the neural network

# optimizer: optimization algorithm (Adam) | loss: the error to minimize;
# binary_crossentropy is used because there are 2 classes

neural_network3.compile(optimizer = 'adam', loss = 'binary_crossentropy', metrics = ['accuracy'])

# Training: 100 epochs with mini-batches of 32 samples

neural_network3.fit(X_train, y_train, batch_size = 32, epochs = 100)

Epoch 1/100

8000/8000 [==============================] - 2s 216us/step - loss: 0.5574 - acc: 0.7860

Epoch 2/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.4544 - acc: 0.7969

Epoch 3/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.4302 - acc: 0.8099

Epoch 4/100

8000/8000 [==============================] - 1s 183us/step - loss: 0.4141 - acc: 0.8233

Epoch 5/100

8000/8000 [==============================] - 1s 170us/step - loss: 0.3949 - acc: 0.8340

Epoch 6/100

8000/8000 [==============================] - 1s 183us/step - loss: 0.3783 - acc: 0.8467

Epoch 7/100

8000/8000 [==============================] - 1s 187us/step - loss: 0.3683 - acc: 0.8530

Epoch 8/100

8000/8000 [==============================] - 2s 190us/step - loss: 0.3644 - acc: 0.8540

Epoch 9/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3611 - acc: 0.8561

Epoch 10/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3602 - acc: 0.8553

Epoch 11/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3586 - acc: 0.8560

Epoch 12/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3570 - acc: 0.8566

Epoch 13/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3565 - acc: 0.8558

Epoch 14/100

8000/8000 [==============================] - 2s 189us/step - loss: 0.3553 - acc: 0.8562

Epoch 15/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3549 - acc: 0.8551

Epoch 16/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3536 - acc: 0.8561

Epoch 17/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3533 - acc: 0.8562

Epoch 18/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3523 - acc: 0.8560

Epoch 19/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3511 - acc: 0.8568

Epoch 20/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3512 - acc: 0.8566

Epoch 21/100

8000/8000 [==============================] - 1s 170us/step - loss: 0.3509 - acc: 0.8584

Epoch 22/100

8000/8000 [==============================] - 2s 188us/step - loss: 0.3497 - acc: 0.8572

Epoch 23/100

8000/8000 [==============================] - 1s 181us/step - loss: 0.3487 - acc: 0.8570

Epoch 24/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3490 - acc: 0.8572

Epoch 25/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3484 - acc: 0.8572

Epoch 26/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3477 - acc: 0.8589

Epoch 27/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3467 - acc: 0.8570

Epoch 28/100

8000/8000 [==============================] - 1s 184us/step - loss: 0.3466 - acc: 0.8578

Epoch 29/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3462 - acc: 0.8584

Epoch 30/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3459 - acc: 0.8576

Epoch 31/100

8000/8000 [==============================] - 1s 178us/step - loss: 0.3457 - acc: 0.8571

Epoch 32/100

8000/8000 [==============================] - 2s 193us/step - loss: 0.3450 - acc: 0.8589

Epoch 33/100

8000/8000 [==============================] - 1s 181us/step - loss: 0.3440 - acc: 0.8581

Epoch 34/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3438 - acc: 0.8585

Epoch 35/100

8000/8000 [==============================] - 1s 187us/step - loss: 0.3437 - acc: 0.8600

Epoch 36/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3431 - acc: 0.8580

Epoch 37/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3425 - acc: 0.8589

Epoch 38/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3419 - acc: 0.8596

Epoch 39/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3416 - acc: 0.8585

Epoch 40/100

8000/8000 [==============================] - 1s 162us/step - loss: 0.3410 - acc: 0.8596

Epoch 41/100

8000/8000 [==============================] - 1s 166us/step - loss: 0.3407 - acc: 0.8601

Epoch 42/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3410 - acc: 0.8589

Epoch 43/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3401 - acc: 0.8595

Epoch 44/100

8000/8000 [==============================] - 1s 178us/step - loss: 0.3396 - acc: 0.8596

Epoch 45/100

8000/8000 [==============================] - 1s 183us/step - loss: 0.3393 - acc: 0.8586

Epoch 46/100

8000/8000 [==============================] - 2s 192us/step - loss: 0.3388 - acc: 0.8613

Epoch 47/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3388 - acc: 0.8600

Epoch 48/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3381 - acc: 0.8606

Epoch 49/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3378 - acc: 0.8621

Epoch 50/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3374 - acc: 0.8610

Epoch 51/100

8000/8000 [==============================] - 1s 159us/step - loss: 0.3378 - acc: 0.8616

Epoch 52/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3367 - acc: 0.8619

Epoch 53/100

8000/8000 [==============================] - 1s 167us/step - loss: 0.3367 - acc: 0.8622

Epoch 54/100

8000/8000 [==============================] - 1s 184us/step - loss: 0.3361 - acc: 0.8624

Epoch 55/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3351 - acc: 0.8611

Epoch 56/100

8000/8000 [==============================] - 1s 187us/step - loss: 0.3355 - acc: 0.8625

Epoch 57/100

8000/8000 [==============================] - 1s 186us/step - loss: 0.3348 - acc: 0.8610

Epoch 58/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3348 - acc: 0.8626

Epoch 59/100

8000/8000 [==============================] - 1s 184us/step - loss: 0.3349 - acc: 0.8630

Epoch 60/100

8000/8000 [==============================] - 1s 174us/step - loss: 0.3346 - acc: 0.8625

Epoch 61/100

8000/8000 [==============================] - 2s 188us/step - loss: 0.3347 - acc: 0.8604

Epoch 62/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3344 - acc: 0.8634

Epoch 63/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3340 - acc: 0.8629

Epoch 64/100

8000/8000 [==============================] - 1s 172us/step - loss: 0.3335 - acc: 0.8632

Epoch 65/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3340 - acc: 0.8631

Epoch 66/100

8000/8000 [==============================] - 2s 199us/step - loss: 0.3333 - acc: 0.8620

Epoch 67/100

8000/8000 [==============================] - 1s 185us/step - loss: 0.3331 - acc: 0.8631

Epoch 68/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3325 - acc: 0.8636

Epoch 69/100

8000/8000 [==============================] - 1s 185us/step - loss: 0.3325 - acc: 0.8628

Epoch 70/100

8000/8000 [==============================] - 2s 189us/step - loss: 0.3325 - acc: 0.8635

Epoch 71/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3322 - acc: 0.8626

Epoch 72/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3323 - acc: 0.8645

Epoch 73/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3325 - acc: 0.8638

Epoch 74/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3321 - acc: 0.8642

Epoch 75/100

8000/8000 [==============================] - 1s 185us/step - loss: 0.3320 - acc: 0.8620

Epoch 76/100

8000/8000 [==============================] - 1s 181us/step - loss: 0.3318 - acc: 0.8625

Epoch 77/100

8000/8000 [==============================] - 2s 188us/step - loss: 0.3316 - acc: 0.8641

Epoch 78/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3319 - acc: 0.8639

Epoch 79/100

8000/8000 [==============================] - 1s 163us/step - loss: 0.3320 - acc: 0.8642

Epoch 80/100

8000/8000 [==============================] - 1s 170us/step - loss: 0.3314 - acc: 0.8631

Epoch 81/100

8000/8000 [==============================] - 1s 180us/step - loss: 0.3313 - acc: 0.8618

Epoch 82/100

8000/8000 [==============================] - 1s 176us/step - loss: 0.3317 - acc: 0.8635

Epoch 83/100

8000/8000 [==============================] - 1s 161us/step - loss: 0.3315 - acc: 0.8644

Epoch 84/100

8000/8000 [==============================] - 1s 181us/step - loss: 0.3315 - acc: 0.8635

Epoch 85/100

8000/8000 [==============================] - 1s 171us/step - loss: 0.3311 - acc: 0.8642

Epoch 86/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3307 - acc: 0.8644

Epoch 87/100

8000/8000 [==============================] - 1s 177us/step - loss: 0.3316 - acc: 0.8644

Epoch 88/100

8000/8000 [==============================] - 1s 179us/step - loss: 0.3309 - acc: 0.8656

Epoch 89/100

8000/8000 [==============================] - 1s 175us/step - loss: 0.3312 - acc: 0.8632

Epoch 90/100

8000/8000 [==============================] - 1s 156us/step - loss: 0.3309 - acc: 0.8639

Epoch 91/100

8000/8000 [==============================] - 1s 185us/step - loss: 0.3308 - acc: 0.8638

Epoch 92/100

8000/8000 [==============================] - 1s 160us/step - loss: 0.3310 - acc: 0.8641

Epoch 93/100

8000/8000 [==============================] - 1s 164us/step - loss: 0.3302 - acc: 0.8638

Epoch 94/100

8000/8000 [==============================] - 1s 182us/step - loss: 0.3312 - acc: 0.8639

Epoch 95/100

8000/8000 [==============================] - 1s 178us/step - loss: 0.3305 - acc: 0.8651

Epoch 96/100

8000/8000 [==============================] - 1s 183us/step - loss: 0.3311 - acc: 0.8644

Epoch 97/100

8000/8000 [==============================] - 1s 169us/step - loss: 0.3303 - acc: 0.8640

Epoch 98/100

8000/8000 [==============================] - 1s 165us/step - loss: 0.3307 - acc: 0.8631

Epoch 99/100

8000/8000 [==============================] - 1s 157us/step - loss: 0.3306 - acc: 0.8645

Epoch 100/100

8000/8000 [==============================] - 1s 168us/step - loss: 0.3307 - acc: 0.8638

<keras.callbacks.History at 0x7f4c84823a20>

This architecture reaches a training accuracy of about 86.4% (0.8638 in the last epoch), slightly above the previous one.
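The `loss` column is the binary cross-entropy selected in `compile`, averaged over the samples:

loss = -mean(y · log(p) + (1 - y) · log(1 - p))

A final loss of about 0.33 means the model assigns, on geometric average, a probability of roughly e^-0.33 ≈ 0.72 to the correct class.

Below is a minimal NumPy version. Clipping at 1e-7 is an assumption modeled on Keras' default `epsilon()` value; Keras' own computation is equivalent up to numerical details. The inputs are hypothetical.

```python
import numpy as np

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(y_prob, dtype=float), eps, 1 - eps)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

# Hypothetical labels and predicted churn probabilities
print(round(binary_crossentropy([1, 0, 1, 0], [0.9, 0.1, 0.6, 0.3]), 4))  # 0.2696
```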

# Predicting the results for the test set

y_pred = neural_network3.predict(X_test)

# Convert the churn probabilities into 0/1 labels using a 0.5 threshold

y_pred_norm = (y_pred > 0.5).astype(int)

y_test = y_test.astype(int)

plot_confusion_matrix(y_test, y_pred_norm, normalize=False, title="Matriz de Confusión: Abandono de clientes")

Matriz de Confusión sin Normalizar

[[1526 69]

[ 214 191]]
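The slightly higher training accuracy of `neural_network3` does not carry over to the test set. Its test accuracy is (1526 + 191) / 2000 = 85.85%, versus 85.95% for `neural_network2`. It also detects fewer actual churners (191 vs 199 of 405).

The row-normalized view makes this easier to compare, since the bottom-right cell becomes the fraction of churners caught. The sketch below applies the same division by row totals as the `normalize=True` option of `plot_confusion_matrix`, to both printed matrices.

```python
import numpy as np

cm_nn2 = np.array([[1520, 75], [206, 199]])  # neural_network2 (printed earlier)
cm_nn3 = np.array([[1526, 69], [214, 191]])  # neural_network3 (printed above)

def row_normalize(cm):
    """Divide each row by its total, so each row shows rates per true class."""
    cm = np.asarray(cm, dtype=float)
    return cm / cm.sum(axis=1)[:, np.newaxis]

for name, cm in [("neural_network2", cm_nn2), ("neural_network3", cm_nn3)]:
    print(name)
    print(np.round(row_normalize(cm), 3))
```

The churner detection rate drops from about 0.491 to 0.472. With this data, adding layers did not help identify the customers who leave.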