For this case we use the UCI "default of credit card clients" Data Set.

Column descriptions:

X1: Amount of credit granted (NT dollars)

X2: Gender (1 = male, 2 = female)

X3: Education (1 = graduate school, 2 = university, 3 = high school, 4 = others)

X4: Marital status (1 = married, 2 = single, 3 = others)

X5: Age (years)

X6-X11: Payment history for the last 6 months (-1 = paid on time; 1 = one month late; 2 = two months late; ...; 8 = eight months late; 9 = nine or more months late)

X12-X17: Monthly bill amount (last 6 months)

X18-X23: Monthly payment amount (last 6 months)

Y: Default payment next month (1 = yes, 0 = no)
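As a quick reference, the column groups above can be written down as a small lookup table. This is only a sketch: the helper name `describe_columns` and the wording of the labels are assumptions made for illustration, not part of the dataset.

```python
# Hypothetical helper: maps the generic column names to short descriptions.
def describe_columns():
    """Return a dict with one entry per column (X1..X23 and Y)."""
    columns = {
        "X1": "credit limit",
        "X2": "gender",
        "X3": "education",
        "X4": "marital status",
        "X5": "age",
    }
    # Three blocks of six monthly columns: X6-X11, X12-X17, X18-X23.
    monthly_blocks = [
        (6, "payment history"),
        (12, "bill amount"),
        (18, "payment amount"),
    ]
    for first, label in monthly_blocks:
        for offset in range(6):
            columns[f"X{first + offset}"] = f"{label}, monthly column {offset + 1} of 6"
    columns["Y"] = "default payment next month (1 = yes, 0 = no)"
    return columns


for name, meaning in describe_columns().items():
    print(f"{name}: {meaning}")
```

A table like this can be handy later for relabeling plots, since the raw names X1..X23 carry no meaning on their own.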

We install csvkit to convert the XLS file to CSV:

!pip install csvkit

Successfully installed agate-1.6.1 agate-dbf-0.2.1 agate-excel-0.2.3 agate-sql-0.5.4 csvkit-1.0.4 dbfread-2.0.7 isodate-0.6.0 leather-0.3.3 parsedatetime-2.4 pytimeparse-1.1.8

We check that the CSV file does not already exist before downloading and converting the data:

%%bash
if [ ! -f "default_credit_card.csv" ]; then
    wget archive.ics.uci.edu/ml/machine-learning-databases/00350/default%20of%20credit%20card%20clients.xls
    in2csv "default of credit card clients.xls" > default_credit_card.csv
fi
ls -l

total 9684

-rw-r--r-- 1 root root 4367295 Nov 9 20:19 default_credit_card.csv

-rw-r--r-- 1 root root 5539328 Jan 26 2016 default of credit card clients.xls

drwxr-xr-x 1 root root 4096 Nov 6 16:17 sample_data

--2019-11-09 20:19:21-- http://archive.ics.uci.edu/ml/machine-learning-databases/00350/default%20of%20credit%20card%20clients.xls

Resolving archive.ics.uci.edu (archive.ics.uci.edu)... 128.195.10.252

Connecting to archive.ics.uci.edu (archive.ics.uci.edu)|128.195.10.252|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5539328 (5.3M) [application/x-httpd-php]

Saving to: ‘default of credit card clients.xls’

2019-11-09 20:19:23 (4.09 MB/s) - ‘default of credit card clients.xls’ saved [5539328/5539328]

/usr/local/lib/python3.6/dist-packages/agate/utils.py:276: UnnamedColumnWarning: Column 0 has no name. Using "a".

We define the helper functions that we will use later:

# Helper functions
import numpy as np
import joblib  # standalone package; replaces the deprecated sklearn.externals.joblib
import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix
from sklearn.utils.multiclass import unique_labels

def plot_confusion_matrix(y_true, y_pred,
                          normalize=False,
                          title=None):
    """
    Print and plot the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    """
    if not title:
        if normalize:
            title = 'Normalized Confusion Matrix'
        else:
            title = 'Confusion Matrix, without Normalization'
    # Compute the confusion matrix
    cm = confusion_matrix(y_true, y_pred)
    # Only use the labels that appear in the data
    classes = unique_labels(y_true, y_pred)
    if normalize:
        # Divide each row by its total, so every row sums to 1
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Normalized Confusion Matrix")
    else:
        print('Confusion Matrix, without Normalization')
    print(cm)
    fig, ax = plt.subplots()
    im = ax.imshow(cm, interpolation='nearest', cmap=plt.cm.Blues)
    ax.figure.colorbar(im, ax=ax)
    ax.grid(linewidth=.0)
    # Show all ticks...
    ax.set(xticks=np.arange(cm.shape[1]),
           yticks=np.arange(cm.shape[0]),
           # ... and label them with the class names
           xticklabels=classes, yticklabels=classes,
           title=title,
           ylabel='True label',
           xlabel='Predicted label')
    # Rotate the tick labels
    plt.setp(ax.get_xticklabels(), rotation=45, ha="right", rotation_mode="anchor")
    # Loop over data dimensions and create text annotations.
    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    for i in range(cm.shape[0]):
        for j in range(cm.shape[1]):
            ax.text(j, i, format(cm[i, j], fmt),
                    ha="center", va="center",
                    color="white" if cm[i, j] > thresh else "black")
    fig.tight_layout()
    plt.show()
    return ax

def saveFile(object_to_save, scaler_filename):
    # Serialize any Python object (e.g. a fitted scaler) to disk
    joblib.dump(object_to_save, scaler_filename)

def loadFile(scaler_filename):
    # Load an object previously stored with saveFile
    return joblib.load(scaler_filename)

def plotHistogram(dataset_final):
    # One histogram per column
    dataset_final.hist(figsize=(20,14), edgecolor="black", bins=40)
    plt.show()

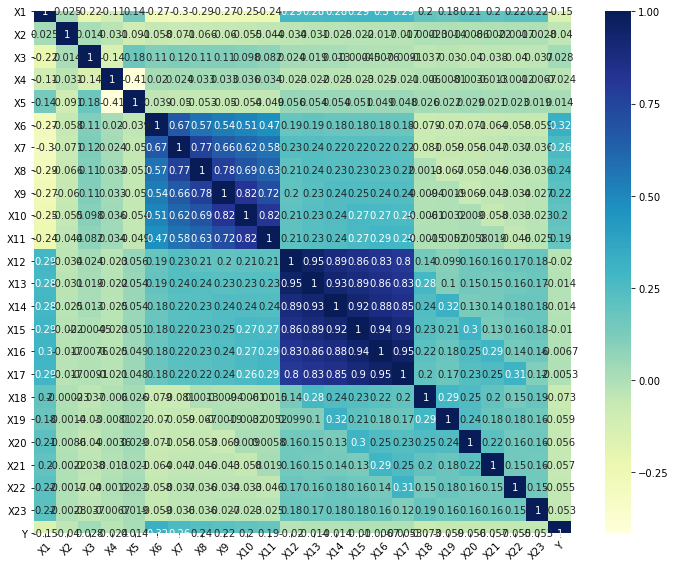

def plotCorrelations(dataset_final):
    fig, ax = plt.subplots(figsize=(10,8)) # size in inches
    g = sns.heatmap(dataset_final.corr(), annot=True, cmap="YlGnBu", ax=ax)
    g.set_yticklabels(g.get_yticklabels(), rotation = 0)
    g.set_xticklabels(g.get_xticklabels(), rotation = 45)
    fig.tight_layout()
    plt.show()
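To make the `normalize=True` option of `plot_confusion_matrix` concrete, here is a minimal sketch of the same row normalization on its own. The helper name `normalize_rows` and the counts are made up for illustration; only NumPy is assumed.

```python
import numpy as np

def normalize_rows(cm):
    """Divide each row of a confusion matrix by that row's total."""
    cm = np.asarray(cm, dtype=float)
    row_totals = cm.sum(axis=1)[:, np.newaxis]  # column vector of per-class totals
    return cm / row_totals

# Made-up counts: rows are true classes, columns are predicted classes.
example_cm = np.array([[90, 10],
                       [30, 70]])
normalized = normalize_rows(example_cm)
print(normalized)
```

After normalization each row sums to 1, so the diagonal holds the fraction of each true class that was predicted correctly, which is easier to compare across classes of very different sizes.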

We load and clean the data: we drop the extra row that holds the descriptive column names and cast the columns back to numeric types:

# Import libraries
import pandas as pd
import numpy as np
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split

# Load the dataset
dataset_csv = pd.read_csv('default_credit_card.csv')

# Dataset columns
print("\nDataset columns: ")
print(dataset_csv.columns)
print("\nFull dataset: ")
print("\n", dataset_csv.head())

# Drop the first two rows with the iloc selector. Row 0 holds the descriptive
# column names; row 1 is the first data record (ID 1), so it is dropped too and
# 29,999 records remain. dataset_csv.iloc[1:, ] would keep that record.
dataset = dataset_csv.iloc[2:, ]
# Drop the ID column ('a'); keep X1..X23 and Y
dataset = dataset.iloc[:, 1:25].copy()
dataset_columns = dataset.columns
dataset_values = dataset.values
print("\nReduced dataset: ")
print("\n", dataset.head())

# Describe the data before casting
print("\nOriginal dataset:\n", dataset.describe(include='all'))

# Cast the feature columns X1..X23 to float
# (np.float64 instead of np.number, which is an abstract type, not a concrete dtype)
for column in ['X%d' % i for i in range(1, 24)]:
    dataset[column] = dataset[column].astype(np.float64)
# The target is read as strings such as '1.0'; go through float to get integers
dataset['Y'] = dataset['Y'].astype(float).astype(int)

# Check the column data types
print("\nDataset column types: ")
print(dataset.dtypes)

Dataset columns:
Index(['a', 'X1', 'X2', 'X3', 'X4', 'X5', 'X6', 'X7', 'X8', 'X9', 'X10', 'X11',
'X12', 'X13', 'X14', 'X15', 'X16', 'X17', 'X18', 'X19', 'X20', 'X21',
'X22', 'X23', 'Y'],
dtype='object')

Full dataset:
a X1 X2 ... X22 X23 Y
0 ID LIMIT_BAL SEX ... PAY_AMT5 PAY_AMT6 default payment next month
1 1.0 20000.0 2.0 ... 0.0 0.0 1.0
2 2.0 120000.0 2.0 ... 0.0 2000.0 1.0
3 3.0 90000.0 2.0 ... 1000.0 5000.0 0.0
4 4.0 50000.0 2.0 ... 1069.0 1000.0 0.0
[5 rows x 25 columns]

Reduced dataset:
X1 X2 X3 X4 X5 ... X20 X21 X22 X23 Y
2 120000.0 2.0 2.0 2.0 26.0 ... 1000.0 1000.0 0.0 2000.0 1.0
3 90000.0 2.0 2.0 2.0 34.0 ... 1000.0 1000.0 1000.0 5000.0 0.0
4 50000.0 2.0 2.0 1.0 37.0 ... 1200.0 1100.0 1069.0 1000.0 0.0
5 50000.0 1.0 2.0 1.0 57.0 ... 10000.0 9000.0 689.0 679.0 0.0
6 50000.0 1.0 1.0 2.0 37.0 ... 657.0 1000.0 1000.0 800.0 0.0
[5 rows x 24 columns]

Original dataset:
X1 X2 X3 X4 X5 ... X20 X21 X22 X23 Y
count 29999 29999 29999 29999 29999 ... 29999 29999 29999 29999 29999
unique 81 2 7 4 56 ... 7518 6937 6897 6939 2
top 50000.0 2.0 2.0 2.0 29.0 ... 0.0 0.0 0.0 0.0 0.0
freq 3365 18111 14029 15964 1605 ... 5967 6407 6702 7172 23364
[4 rows x 24 columns]

Dataset column types:
X1 float64
X2 float64
X3 float64
X4 float64
X5 float64
X6 float64
X7 float64
X8 float64
X9 float64
X10 float64
X11 float64
X12 float64
X13 float64
X14 float64
X15 float64
X16 float64
X17 float64
X18 float64
X19 float64
X20 float64
X21 float64
X22 float64
X23 float64
Y int64
dtype: object

We standardize the feature values:

# Feature scaling/standardization (StandardScaler: (x - u) / s)
stdScaler = StandardScaler()
dataset_values[:, 0:23] = stdScaler.fit_transform(dataset_values[:, 0:23])

# Final standardized dataset
dataset_final = pd.DataFrame(dataset_values, columns=dataset_columns, dtype=np.float64)
print("\nFinal dataset:")
print(dataset_final.describe(include='all'))
print("\n", dataset_final.head())

Final dataset:
X1 X2 ... X23 Y
count 2.999900e+04 2.999900e+04 ... 2.999900e+04 29999.000000
mean 9.979387e-16 4.700634e-15 ... 6.060447e-17 0.221174
std 1.000017e+00 1.000017e+00 ... 1.000017e+00 0.415044
min -1.213838e+00 -1.234289e+00 ... -2.933874e-01 0.000000
25% -9.055406e-01 -1.234289e+00 ... -2.867497e-01 0.000000
50% -2.118715e-01 8.101831e-01 ... -2.090108e-01 0.000000
75% 5.588719e-01 8.101831e-01 ... -6.838310e-02 0.000000
max 6.416522e+00 8.101831e-01 ... 2.944464e+01 1.000000
[8 rows x 24 columns]

X1 X2 X3 X4 ... X21 X22 X23 Y
0 -0.366020 0.810183 0.185831 0.858524 ... -0.244236 -0.314142 -0.180885 1.0
1 -0.597243 0.810183 0.185831 0.858524 ... -0.244236 -0.248689 -0.012132 0.0
2 -0.905541 0.810183 0.185831 -1.057332 ... -0.237853 -0.244173 -0.237136 0.0
3 -0.905541 -1.234289 0.185831 -1.057332 ... 0.266419 -0.269045 -0.255193 0.0
4 -0.905541 -1.234289 -1.079434 0.858524 ... -0.244236 -0.248689 -0.248387 0.0
[5 rows x 24 columns]

Plotting the data:

# Data distributions and correlations
print("\n Histograms:")
plotHistogram(dataset_final)
print("\n Correlations:")
plotCorrelations(dataset_final)

We separate the predictors from the target and split the data 80% / 20% into training and test sets:

# Select the values to process: 23 feature columns and the target column
X = dataset_final.iloc[:, 0:23].values
y = dataset_final.iloc[:, 23].values

# Split the dataset into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0)
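Note that the cells above fit `StandardScaler` on all rows before the split, so the test rows contribute to the mean and standard deviation. A common alternative, sketched below under stated assumptions, is to compute those statistics on the training rows only and reuse them for the test rows. The function name `standardize_with_train_stats` and the synthetic data are hypothetical, and plain NumPy stands in for `StandardScaler` here.

```python
import numpy as np

def standardize_with_train_stats(X_train, X_test):
    """Apply (x - u) / s with u and s computed from the training rows only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)      # population standard deviation (ddof=0)
    std[std == 0] = 1.0            # leave constant columns unscaled
    return (X_train - mean) / std, (X_test - mean) / std

# Synthetic stand-in data, not the credit card dataset.
rng = np.random.default_rng(0)
X_demo = rng.normal(loc=50.0, scale=10.0, size=(100, 3))
X_train_demo, X_test_demo = X_demo[:80], X_demo[80:]   # simple 80% / 20% split

train_scaled, test_scaled = standardize_with_train_stats(X_train_demo, X_test_demo)
print(train_scaled.mean(axis=0).round(6), train_scaled.std(axis=0).round(6))
```

With this ordering the training columns end up with mean 0 and standard deviation 1, while the test columns are only approximately standardized, which is expected.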

We define the following architecture:

Input => 23

Hidden => 20 / 10 / 5

Output => 1

# Import Keras (TensorFlow backend)
from keras.models import Sequential
from keras.layers import Dense
from keras.initializers import RandomUniform

# Initialize the neural network
neural_network = Sequential()

# kernel_initializer defines how the initial weights Wi are assigned
initial_weights = RandomUniform(minval = -0.5, maxval = 0.5)

# Add the input layer and the first hidden layer:
# 23 input features and 20 neurons in the first hidden layer
neural_network.add(Dense(units = 20, kernel_initializer = initial_weights, activation = 'relu', input_dim = 23))

# Add the second hidden layer (10 neurons)
neural_network.add(Dense(units = 10, kernel_initializer = initial_weights, activation = 'relu'))

# Add the third hidden layer (5 neurons)
neural_network.add(Dense(units = 5, kernel_initializer = initial_weights, activation = 'relu'))

# Add the output layer (1 neuron, sigmoid for a probability between 0 and 1)
neural_network.add(Dense(units = 1, kernel_initializer = initial_weights, activation = 'sigmoid'))
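To show what this stack of `Dense` layers computes, here is a minimal NumPy sketch of one forward pass through a 23 / 20 / 10 / 5 / 1 network with ReLU hidden layers and a sigmoid output. It is an illustration only, not the Keras implementation: the helper names (`init_layers`, `forward`), the random input and the seed are assumptions.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_layers(sizes, rng, low=-0.5, high=0.5):
    """One (weights, biases) pair per layer: uniform weights, zero biases."""
    return [(rng.uniform(low, high, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(X, layers):
    """ReLU on every hidden layer, sigmoid on the output layer."""
    a = X
    for W, b in layers[:-1]:
        a = relu(a @ W + b)
    W, b = layers[-1]
    return sigmoid(a @ W + b)

rng = np.random.default_rng(0)
layers = init_layers([23, 20, 10, 5, 1], rng)
X_demo = rng.normal(size=(4, 23))      # 4 made-up rows with 23 features each
probs = forward(X_demo, layers)
print(probs.shape)
```

Each row produces a single value between 0 and 1, which is read as the predicted probability of class 1.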

We print the network architecture:

neural_network.summary()

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 20) 480

_________________________________________________________________

dense_2 (Dense) (None, 10) 210

_________________________________________________________________

dense_3 (Dense) (None, 5) 55

_________________________________________________________________

dense_4 (Dense) (None, 1) 6

=================================================================

Total params: 751

Trainable params: 751

Non-trainable params: 0

_________________________________________________________________
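The parameter counts in the summary can be checked by hand: a dense layer has one weight per input-unit pair plus one bias per unit. The short sketch below reproduces the numbers; the function name `dense_param_counts` is hypothetical.

```python
def dense_param_counts(layer_sizes):
    """Per-layer counts: inputs * units weights plus units biases."""
    return [n_in * n_out + n_out
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

counts = dense_param_counts([23, 20, 10, 5, 1])
print(counts, sum(counts))  # [480, 210, 55, 6] 751
```

For example, the first layer has 23 * 20 = 460 weights and 20 biases, which gives the 480 shown for `dense_1`.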

We train the model for 100 epochs:

# Compile the neural network
# optimizer: optimization algorithm | binary_crossentropy: loss for 2 classes
# loss: the error function to minimize
neural_network.compile(optimizer = 'adam', loss = 'binary_crossentropy', metrics = ['accuracy'])

# Training
neural_network.fit(X_train, y_train, batch_size = 32, epochs = 100)
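The two numbers reported per epoch, `loss` and `acc`, can be illustrated with a small pure-Python sketch of binary cross-entropy and accuracy. The labels and probabilities are made up, and the clipping constant `eps` and the 0.5 decision threshold are assumptions chosen for the example.

```python
import math

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)); p is clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of rows where the thresholded probability matches the label."""
    hits = sum(1 for y, p in zip(y_true, y_prob) if (p > threshold) == (y == 1))
    return hits / len(y_true)

# Made-up labels and predicted probabilities, for illustration only.
y_demo = [1, 0, 0, 1]
p_demo = [0.9, 0.2, 0.4, 0.3]
print(round(binary_cross_entropy(y_demo, p_demo), 4), accuracy(y_demo, p_demo))
```

For context when reading the accuracy values, the `describe` output earlier reports a mean of 0.221 for Y, so always predicting 0 would already score about 0.779 on the full dataset. The log of the `fit` call follows.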

Epoch 1/100

23999/23999 [==============================] - 3s 133us/step - loss: 0.4300 - acc: 0.8207

Epoch 2/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4291 - acc: 0.8207

Epoch 3/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4281 - acc: 0.8207

Epoch 4/100

23999/23999 [==============================] - 3s 118us/step - loss: 0.4276 - acc: 0.8212

Epoch 5/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4271 - acc: 0.8210

Epoch 6/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4265 - acc: 0.8208

Epoch 7/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4254 - acc: 0.8213

Epoch 8/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4251 - acc: 0.8212

Epoch 9/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4251 - acc: 0.8216

Epoch 10/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4241 - acc: 0.8215

Epoch 11/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4241 - acc: 0.8213

Epoch 12/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4237 - acc: 0.8228

Epoch 13/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4235 - acc: 0.8221

Epoch 14/100

23999/23999 [==============================] - 3s 119us/step - loss: 0.4229 - acc: 0.8236

Epoch 15/100

23999/23999 [==============================] - 3s 119us/step - loss: 0.4226 - acc: 0.8220

Epoch 16/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4222 - acc: 0.8229

Epoch 17/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4221 - acc: 0.8220

Epoch 18/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4218 - acc: 0.8229

Epoch 19/100

23999/23999 [==============================] - 3s 119us/step - loss: 0.4215 - acc: 0.8231

Epoch 20/100

23999/23999 [==============================] - 3s 118us/step - loss: 0.4212 - acc: 0.8217

Epoch 21/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4211 - acc: 0.8229

Epoch 22/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4209 - acc: 0.8231

Epoch 23/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4205 - acc: 0.8234

Epoch 24/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4206 - acc: 0.8231

Epoch 25/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4203 - acc: 0.8229

Epoch 26/100

23999/23999 [==============================] - 3s 118us/step - loss: 0.4199 - acc: 0.8224

Epoch 27/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4197 - acc: 0.8225

Epoch 28/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4197 - acc: 0.8222

Epoch 29/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4199 - acc: 0.8237

Epoch 30/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4192 - acc: 0.8234

Epoch 31/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4190 - acc: 0.8231

Epoch 32/100

23999/23999 [==============================] - 3s 118us/step - loss: 0.4187 - acc: 0.8233

Epoch 33/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4185 - acc: 0.8227

Epoch 34/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4182 - acc: 0.8234

Epoch 35/100

23999/23999 [==============================] - 3s 120us/step - loss: 0.4181 - acc: 0.8226

Epoch 36/100

23999/23999 [==============================] - 3s 121us/step - loss: 0.4180 - acc: 0.8228

Epoch 37/100

23999/23999 [==============================] - 3s 121us/step - loss: 0.4180 - acc: 0.8229

Epoch 38/100

23999/23999 [==============================] - 3s 121us/step - loss: 0.4174 - acc: 0.8234

Epoch 39/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4176 - acc: 0.8230

Epoch 40/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4173 - acc: 0.8232

Epoch 41/100

23999/23999 [==============================] - 3s 119us/step - loss: 0.4170 - acc: 0.8241

Epoch 42/100

23999/23999 [==============================] - 3s 122us/step - loss: 0.4172 - acc: 0.8236

Epoch 43/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4167 - acc: 0.8235

Epoch 44/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4165 - acc: 0.8230

Epoch 45/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4163 - acc: 0.8245

Epoch 46/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4161 - acc: 0.8245

Epoch 47/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4159 - acc: 0.8244

Epoch 48/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4161 - acc: 0.8236

Epoch 49/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4157 - acc: 0.8250

Epoch 50/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4161 - acc: 0.8237

Epoch 51/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4158 - acc: 0.8232

Epoch 52/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4154 - acc: 0.8247

Epoch 53/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4159 - acc: 0.8237

Epoch 54/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4154 - acc: 0.8249

Epoch 55/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4150 - acc: 0.8251

Epoch 56/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4153 - acc: 0.8240

Epoch 57/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4153 - acc: 0.8250

Epoch 58/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4151 - acc: 0.8252

Epoch 59/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4145 - acc: 0.8245

Epoch 60/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4147 - acc: 0.8245

Epoch 61/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4146 - acc: 0.8253

Epoch 62/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4146 - acc: 0.8251

Epoch 63/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4140 - acc: 0.8251

Epoch 64/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4142 - acc: 0.8242

Epoch 65/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4142 - acc: 0.8246

Epoch 66/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4137 - acc: 0.8247

Epoch 67/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4141 - acc: 0.8249

Epoch 68/100

23999/23999 [==============================] - 3s 117us/step - loss: 0.4140 - acc: 0.8260

Epoch 69/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4137 - acc: 0.8248

Epoch 70/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4139 - acc: 0.8252

Epoch 71/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4131 - acc: 0.8251

Epoch 72/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4133 - acc: 0.8253

Epoch 73/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4135 - acc: 0.8254

Epoch 74/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4128 - acc: 0.8245

Epoch 75/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4134 - acc: 0.8254

Epoch 76/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4132 - acc: 0.8245

Epoch 77/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4129 - acc: 0.8249

Epoch 78/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4129 - acc: 0.8255

Epoch 79/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4130 - acc: 0.8252

Epoch 80/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4130 - acc: 0.8253

Epoch 81/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4125 - acc: 0.8259

Epoch 82/100

23999/23999 [==============================] - 3s 116us/step - loss: 0.4123 - acc: 0.8257

Epoch 83/100

23999/23999 [==============================] - 3s 115us/step - loss: 0.4126 - acc: 0.8252

Epoch 84/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4124 - acc: 0.8255

Epoch 85/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4120 - acc: 0.8254

Epoch 86/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4123 - acc: 0.8256

Epoch 87/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4124 - acc: 0.8254

Epoch 88/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4117 - acc: 0.8263

Epoch 89/100

23999/23999 [==============================] - 3s 114us/step - loss: 0.4118 - acc: 0.8252

Epoch 90/100

23999/23999 [==============================] - 3s 118us/step - loss: 0.4121 - acc: 0.8253

Epoch 91/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4118 - acc: 0.8256

Epoch 92/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4115 - acc: 0.8264

Epoch 93/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4113 - acc: 0.8255

Epoch 94/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4120 - acc: 0.8256

Epoch 95/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4113 - acc: 0.8260

Epoch 96/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4114 - acc: 0.8261

Epoch 97/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4114 - acc: 0.8255

Epoch 98/100

23999/23999 [==============================] - 3s 113us/step - loss: 0.4113 - acc: 0.8267

Epoch 99/100

23999/23999 [==============================] - 3s 112us/step - loss: 0.4112 - acc: 0.8254

Epoch 100/100

23999/23999 [==============================] - 3s 111us/step - loss: 0.4112 - acc: 0.8253

<keras.callbacks.History at 0x7fd8588fa2e8>

We obtain an accuracy of about 82.5% on the training set.

We run predictions on the test data and generate the confusion matrix:

# Predict on the test set; the 0.5 threshold below turns the predicted probabilities into class labels

y_pred = neural_network.predict(X_test)

y_pred_norm = (y_pred > 0.5)

y_pred_norm = y_pred_norm.astype(int)

y_test = y_test.astype(int)

plot_confusion_matrix(y_test, y_pred_norm, normalize=False,title="Matriz de Confusión: Incumplimiento de Pagos de Tarjetas de Credito")

Matriz de Confusión sin Normalizar

[[4458 235]

[ 867 440]]
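
The counts in the confusion matrix above can be turned into the usual classification metrics. The following is a minimal sketch, not part of the original notebook: it assumes the matrix follows the scikit-learn layout (rows are true classes, columns are predicted classes, in the order 0, 1) and that class 1 means "default". The variable names are illustrative.

```python
import numpy as np

# Confusion matrix copied from the output above.
# Assumed layout (scikit-learn convention): rows = true class, columns = predicted class.
cm = np.array([[4458, 235],
               [867, 440]])

# For a 2x2 matrix in this layout, ravel() yields TN, FP, FN, TP.
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()      # share of all test samples classified correctly
precision = tp / (tp + fp)           # share of predicted defaults that are real defaults
recall = tp / (tp + fn)              # share of real defaults that the model detects
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy : {accuracy:.3f}")
print(f"precision: {precision:.3f}")
print(f"recall   : {recall:.3f}")
print(f"f1       : {f1:.3f}")
```

Under those assumptions, the test accuracy is about 81.6% (4898 of 6000), close to the roughly 82.5% seen during training. Precision for class 1 is about 65%, but recall is only about 34%: the model misses roughly two out of three defaults, which accuracy alone does not reveal on an imbalanced dataset like this one.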